AI Integration | Nov 10, 2025

Real-Time Voice AI Agent with RAG

Author

Teddy Gyabaah


An intelligent voice receptionist for SMBs, powered by real-time AI and enterprise knowledge retrieval.

Overview

I built a real-time voice AI agent that lets businesses offer instant, conversational support to their customers — without human intervention.

Users can speak naturally, and the agent replies in real time, grounded in the company's own documentation rather than the open internet.

Use case: A plumber, lawyer, or HVAC service can deploy this as a 24/7 AI receptionist grounded in its own materials, answering calls, quoting services, and helping customers instantly.

Goal: Bring LLM-powered conversation to real-world businesses, with reliable, knowledge-grounded answers.

Tech Architecture

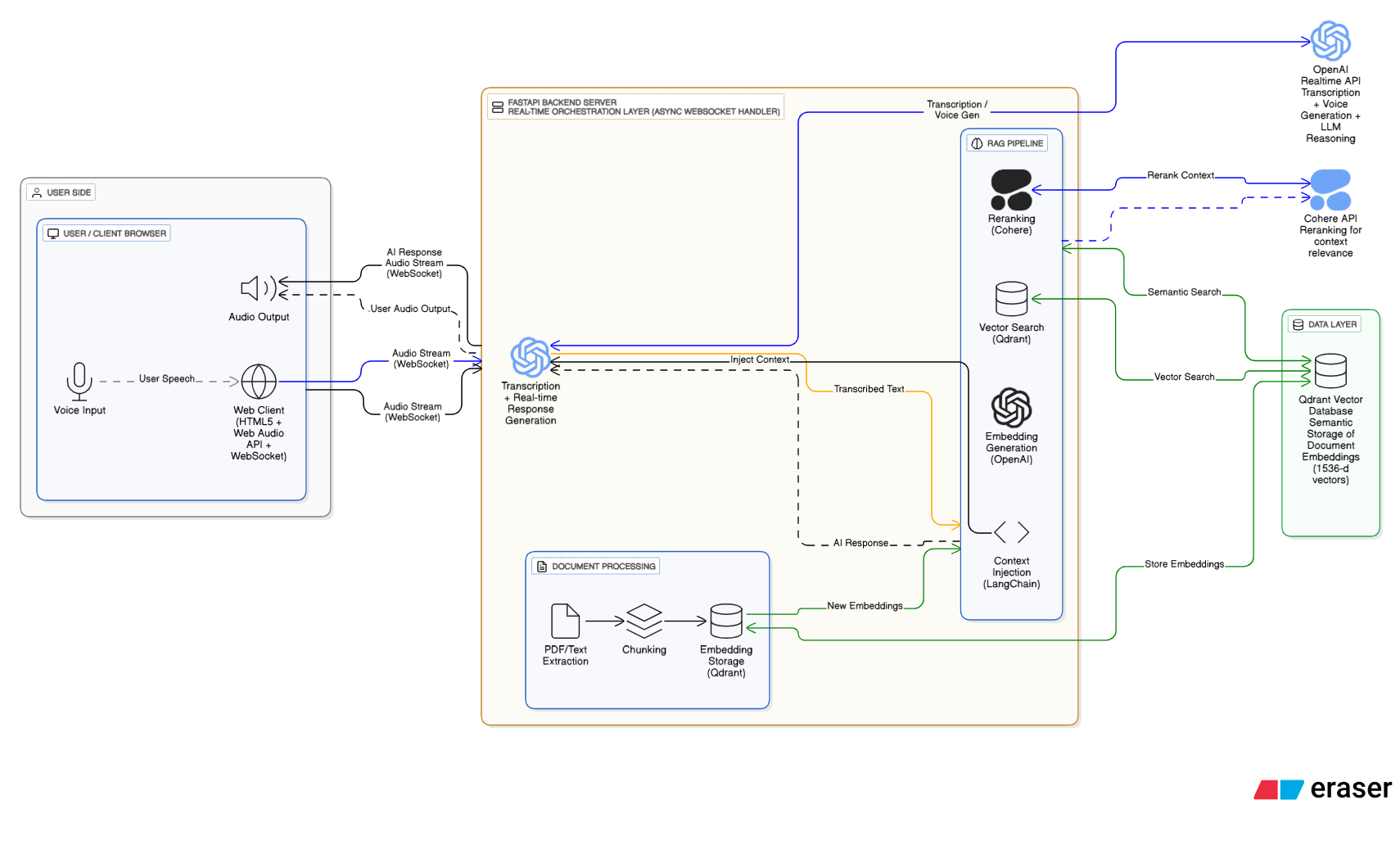

Stack: FastAPI · OpenAI Realtime API · Qdrant · Cohere · LangChain · WebSocket · Python (AsyncIO)

How it works:

1. User speaks → The user's audio is captured on their device and streamed in real time over a WebSocket connection to the backend server, which keeps the bidirectional link low-latency.

2. OpenAI Realtime API → The incoming audio stream is passed to OpenAI's Realtime API, which transcribes the speech and generates a response with its language model as the audio arrives, instead of waiting for a complete recording.

3. RAG pipeline → To keep answers accurate and company-specific, a Retrieval-Augmented Generation pipeline searches the business's documentation: Qdrant runs a vector similarity search to shortlist candidate chunks, and Cohere's reranker orders that shortlist so the chunks most relevant to the user's query come first.

4. Context injection → The retrieved chunks are injected into the LLM's prompt as context, so each response is grounded in the company's actual documentation rather than the model's general knowledge.

5. Audio output → The LLM's response is converted to natural-sounding speech, and the audio is streamed back to the user's browser in real time, creating a seamless conversational experience.
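Steps 3 and 4 can be sketched as a two-stage retrieve-then-rerank lookup followed by prompt assembly. This is a self-contained illustration under stated assumptions, not the project's code: a bag-of-words cosine score stands in for Qdrant's vector search, a term-overlap score stands in for Cohere's reranker, and the helper names (`embed`, `vector_search`, `rerank`, `build_prompt`), the prompt wording, and the sample documents are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def vector_search(query: str, chunks: List[str], top_k: int = 3) -> List[str]:
    """Stage 1 (stand-in for a Qdrant similarity query): broad, cheap shortlist."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_k]


def rerank(query: str, candidates: List[str], top_n: int = 2) -> List[str]:
    """Stage 2 (stand-in for Cohere's reranker): rescore only the shortlist.

    Toy score: fraction of distinct query terms that appear in the chunk.
    """
    q_terms = set(embed(query))

    def score(chunk: str) -> float:
        return len(q_terms & set(embed(chunk))) / len(q_terms) if q_terms else 0.0

    return sorted(candidates, key=score, reverse=True)[:top_n]


def build_prompt(query: str, context: List[str]) -> str:
    """Context injection: retrieved chunks go ahead of the question, with a grounding rule."""
    numbered = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(context, start=1))
    return (
        "Answer using only the business documentation below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Documentation:\n{numbered}\n\n"
        f"Customer question: {query}"
    )


# Hypothetical knowledge base for a plumbing business.
docs = [
    "An emergency call-out costs $150 plus parts, available 24/7.",
    "Standard drain cleaning is $95 and is scheduled within two business days.",
    "We service water heaters from all major brands.",
    "Our office is closed on public holidays.",
]
question = "How much is an emergency call-out?"
context = rerank(question, vector_search(question, docs))
prompt = build_prompt(question, context)
print(prompt)
```

The second stage only scores the shortlist returned by the vector search, which is what makes it affordable to use a slower but more precise model there without scanning the whole knowledge base.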
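The low-latency, bidirectional streaming in steps 1 and 5 comes down to running two forwarding loops at the same time: one pushing microphone audio from the browser toward the model, one pushing reply audio back. The sketch below shows that shape with `asyncio.gather`. `FakeSocket` is a hypothetical in-memory stand-in for both the browser WebSocket and the upstream Realtime API connection, and using `None` as an end-of-stream marker is an assumption of the sketch, not how real WebSocket libraries signal closure.

```python
import asyncio
from typing import List, Optional


class FakeSocket:
    """Hypothetical in-memory stand-in for a WebSocket connection.

    receive() returns queued frames in order; None marks end of stream.
    """

    def __init__(self, incoming: List[Optional[bytes]]) -> None:
        self.inbox = asyncio.Queue()
        for frame in incoming:
            self.inbox.put_nowait(frame)
        self.sent: List[bytes] = []

    async def receive(self) -> Optional[bytes]:
        return await self.inbox.get()

    async def send(self, frame: bytes) -> None:
        self.sent.append(frame)


async def pump(src: FakeSocket, dst: FakeSocket) -> None:
    """Forward frames from src to dst until src reports end of stream."""
    while (frame := await src.receive()) is not None:
        await dst.send(frame)


async def relay(browser: FakeSocket, model: FakeSocket) -> None:
    """Run both directions concurrently: mic audio upstream, reply audio downstream."""
    await asyncio.gather(pump(browser, model), pump(model, browser))


async def main() -> tuple:
    # Create the sockets inside the coroutine so their queues belong to the running loop.
    browser = FakeSocket([b"mic-chunk-1", b"mic-chunk-2", None])
    model = FakeSocket([b"audio-out-1", None])
    await relay(browser, model)
    return browser, model


browser, model = asyncio.run(main())
print("sent upstream:", model.sent)
print("sent to browser:", browser.sent)
```

Because neither loop waits for the other, reply audio can start flowing back to the browser while the user's audio is still being streamed up.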


Results & Impact

Performance Metrics

| Metric | Value |
|---|---|
| Latency | < 500 ms response time |
| Accuracy | 95%+ on domain-specific queries |

Potential Business Value

- 24/7 Availability: No need for human receptionists around the clock

- Cost Reduction: Significant savings on customer service overhead

- Consistent Quality: Every customer gets accurate, consistent information

- Scalability: Handle multiple calls simultaneously without additional staff

Use Cases That Could Be Enabled

- Service Businesses: Plumbers, electricians, and HVAC services can quote and schedule instantly

- Professional Services: Lawyers and consultants can provide initial consultations

- Healthcare: Appointment scheduling and basic information queries

- Retail: Product information and availability checking